What is Apache Hadoop?

Apache Hadoop is an open-source software framework for distributed storage and processing of big data sets using the MapReduce programming model. It runs on computer clusters built from commodity hardware. A fundamental assumption in Hadoop's design is that hardware failures are common and that the framework should handle them automatically. The platform has recently come back into discussion because of the release of Apache Ranger.

The Hadoop platform consists of a storage part, the Hadoop Distributed File System (HDFS), and a processing part, which is an implementation of the MapReduce programming model. Hadoop splits files into large blocks and distributes them across the nodes in a cluster. It then transfers packaged code to the nodes so that they process the data in parallel. This approach takes advantage of data locality: each node works on the data it already holds, which allows faster and more efficient processing of the dataset. The new release may also increase the number of freelance jobs online in this field.
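
The block splitting and data locality described above can be illustrated with a minimal sketch. It is not HDFS code: the function names, the tiny block size, the replication factor and the round-robin placement are all assumptions chosen to keep the example readable.

```python
BLOCK_SIZE = 4    # bytes; toy value, real HDFS blocks are far larger
REPLICATION = 2   # copies kept of each block; illustrative value


def split_into_blocks(data, block_size=BLOCK_SIZE):
    """Cut a file's bytes into fixed-size blocks (the last one may be shorter)."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def place_blocks(num_blocks, nodes, replication=REPLICATION):
    """Assign every block to `replication` distinct nodes, round-robin."""
    if replication > len(nodes):
        raise ValueError("replication cannot exceed the number of nodes")
    return {
        block_id: [nodes[(block_id + r) % len(nodes)] for r in range(replication)]
        for block_id in range(num_blocks)
    }


def schedule_local(placement):
    """For each block, pick a node that already stores it (data locality)."""
    return {block_id: holders[0] for block_id, holders in placement.items()}


if __name__ == "__main__":
    blocks = split_into_blocks(b"hello hadoop!")
    placement = place_blocks(len(blocks), ["node-a", "node-b", "node-c"])
    for block_id, node in schedule_local(placement).items():
        print(f"block {block_id} ({blocks[block_id]!r}) is processed on {node}")
```

Because every task is scheduled on a node that already holds the block, only the packaged code has to travel over the network, not the data.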

The base Apache Hadoop framework consists of the following modules:

- Hadoop Common contains libraries and utilities needed by other Hadoop modules

- HDFS stores data on commodity machines, providing very high aggregate bandwidth across the cluster

- YARN is the resource-management platform for managing the resources in clusters and using them for scheduling user applications

- Hadoop MapReduce is an implementation of the MapReduce programming model for large-scale data processing
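
To make the MapReduce programming model from the list above more concrete, here is a small word-count sketch in plain Python. It only imitates the map, shuffle and reduce phases inside a single process. The function names are made up for this illustration and are not part of any Hadoop API.

```python
from collections import defaultdict


def map_phase(block):
    """Emit a (word, 1) pair for every word found in one input block."""
    return [(word.lower(), 1) for word in block.split()]


def shuffle(pairs):
    """Group the emitted values by key, as happens between map and reduce."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Combine all values collected for one key into a single result."""
    return key, sum(values)


def word_count(blocks):
    """Run map over every block, then shuffle and reduce the results."""
    pairs = [pair for block in blocks for pair in map_phase(block)]
    return dict(reduce_phase(key, values) for key, values in shuffle(pairs).items())


if __name__ == "__main__":
    print(word_count(["big data big", "data lake"]))
```

In a real cluster, each call to `map_phase` would run on the node that stores the block, and the reduce work would be spread across nodes as well.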

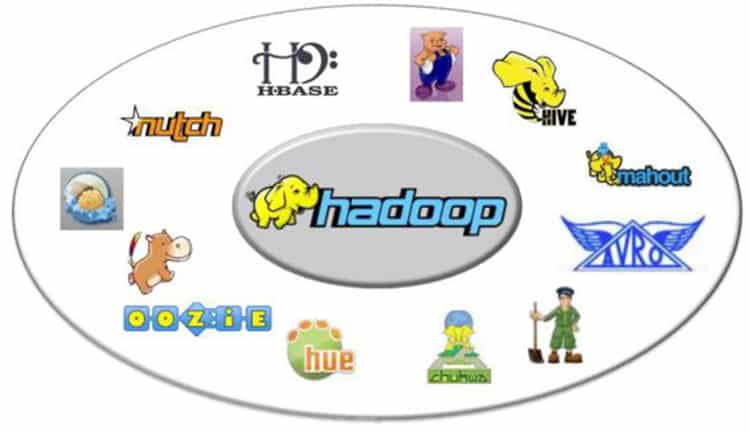

Hadoop is not just the base modules mentioned above but also its ecosystem: the collection of additional software packages related to Hadoop.

Apache Ranger:

Apache Ranger is a framework to enable, monitor and manage data security across the Hadoop platform. It currently provides centralised security administration, access control and detailed auditing of user access within Hadoop, Hive, HBase and other Apache components.

The framework's vision is to provide comprehensive security across the Apache Hadoop ecosystem. With Apache YARN, the Hadoop platform can now support a true data lake architecture. Data security within Hadoop therefore needs to evolve to support multiple use cases for data access, while providing a framework for the central administration of security policies and the monitoring of user access.

Framework Goals:

- Provide centralised security administration, so that all security tasks can be managed in a central UI or through REST APIs.

- Provide fine-grained authorisation for specific actions and operations on a Hadoop component or tool, managed through a central administration tool.

- Standardise the authorisation method across all Hadoop components.

- Improve support for different authorisation methods, such as role-based access control and attribute-based access control.

- Provide centralised auditing of user access and administrative actions within all the components of Hadoop.
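
As a rough idea of what managing policies through REST APIs can look like, the sketch below builds a policy document and prepares an HTTP request for it without sending anything. The host name, the service name `dev_hdfs`, the endpoint path and the JSON field names are assumptions made for illustration. They are not taken from this article and should be checked against the Ranger REST API documentation for your version.

```python
import json
import urllib.request

# Hypothetical values for illustration only; none of them come from the article.
RANGER_ADMIN_URL = "http://ranger.example.com:6080"
POLICY_ENDPOINT = "/service/public/v2/api/policy"  # assumed policy endpoint


def build_policy(service, name, path, groups, access_types):
    """Build a policy document granting `access_types` on `path` to `groups`."""
    return {
        "service": service,
        "name": name,
        "isEnabled": True,
        "isAuditEnabled": True,
        "resources": {"path": {"values": [path], "isRecursive": True}},
        "policyItems": [
            {
                "groups": list(groups),
                "accesses": [{"type": t, "isAllowed": True} for t in access_types],
            }
        ],
    }


def build_create_request(policy):
    """Prepare, but do not send, the HTTP request that would create the policy."""
    return urllib.request.Request(
        RANGER_ADMIN_URL + POLICY_ENDPOINT,
        data=json.dumps(policy).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    policy = build_policy("dev_hdfs", "sales-read", "/data/sales", ["analysts"], ["read"])
    request = build_create_request(policy)
    print(request.get_method(), request.full_url)
    print(json.dumps(policy, indent=2))
```

Authentication and the actual network call are deliberately left out; in practice the prepared request would be sent with credentials for an administrator account.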

Apache Ranger Features you need to know:

- It offers a centralised security framework to manage fine-grained access control over Hadoop and related components. Using the Administration Console, users can manage policies for access to a resource by specific users and groups, and enforce those policies within Hadoop. Users can also enable tracking and policy analytics for better control of the environment. It also provides the ability to delegate administration of certain data to other group owners, with the intention of decentralising data ownership.

- It has a centralised web application, which consists of the policy administration, audit and reporting modules. Authorised users can manage security policies using the web tools or APIs. These security policies are enforced within the Hadoop ecosystem by lightweight Ranger Java plugins, which run inside the process of the component they protect, so there is no additional OS-level process to manage.

- It provides a plugin for Apache Hadoop, specifically for the NameNode, as part of the authorisation method. The Apache Ranger plugin sits in the path of the user request and decides whether the request should be authorised. The plugin also collects the access request details required for auditing.

- It enforces the security policies available in your policy database. Users can create a security policy for a specific set of resources and assign permissions to specific groups. The security policies are stored in the policy manager and are independent of native permissions.

- It enforces authorisation based on the policies entered into the policy administration tool. It can also validate access using native Hadoop file-level permissions if the Ranger policies do not cover the requested access.

- The Apache Ranger plugin for Hadoop is only needed in the NameNode and not in each DataNode.
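
The decision flow described in the list above (check the central policies first, fall back to native file-level permissions when no policy covers the request, and record every request for auditing) can be sketched as follows. This is a simplified model rather than the plugin's actual logic; the `Policy` class, the `authorize` function and the `native_check` callback are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Policy:
    path_prefix: str   # directory the policy applies to
    groups: set        # groups that receive the permissions
    accesses: set      # permitted access types, e.g. {"read"}


def covers(policy, path):
    """True if `path` is the policy's directory or lies beneath it."""
    prefix = policy.path_prefix.rstrip("/")
    return path == prefix or path.startswith(prefix + "/")


def authorize(policies, user, groups, path, access, native_check, audit_log):
    """Allow if a policy grants the access, otherwise defer to native permissions."""
    granted = any(
        covers(p, path) and access in p.accesses and bool(groups & p.groups)
        for p in policies
    )
    if granted:
        allowed, source = True, "ranger-policy"
    else:
        # No policy covers this request: fall back to file-level permissions.
        allowed, source = native_check(user, path, access), "native-permissions"
    # Every request is recorded, whether it was allowed or denied.
    audit_log.append(
        {"user": user, "path": path, "access": access, "allowed": allowed, "source": source}
    )
    return allowed
```

A caller would pass its own `native_check` function, for example one that consults the file's owner and mode bits, plus a list that collects the audit records.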

Apache Ranger is now a TLP:

On 8 February 2017, the Apache Software Foundation (ASF) announced that Apache Ranger had graduated from the Apache Incubator to become a Top-Level Project (TLP). This status means that the project’s community and products have been well governed under the ASF's meritocratic process and principles.

The latest addition to the Hadoop family, Apache Ranger offers very comprehensive security coverage, with native support for multiple Apache projects, including Atlas, HBase, HDFS, Hive, Knox, NiFi, Solr, Kafka, Storm, and YARN.

Apache Ranger provides an easy and effective way to set access control policies and audit data access across the entire Hadoop stack, following industry best practices. A key benefit of Ranger is that security administrators can manage access control policies consistently across the Hadoop environment. Ranger's robust plugin architecture also enables the community to add authorisation support for new systems, even outside the Hadoop ecosystem, with minimal effort.

The software is released under the Apache License v2.0 and is overseen by a team of active contributors to the project. A Project Management Committee (PMC) guides the project’s day-to-day operations, including community development and product releases.

Apache Ranger provides many advanced features like:

- Ranger Key Management Service (compatible with KMS API)

- Dynamic column masking and row filtering

- Dynamic policy conditions

- User context enrichers

- Classification or tag-based policies for Hadoop ecosystem components via integration with Apache Atlas
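
To give a feel for dynamic column masking and row filtering from the list above, here is a small sketch that applies both to in-memory rows. In Ranger these rules are defined as policies and applied by the plugin when a query runs. The functions, the group names and the masking style below are assumptions made for this example only.

```python
def mask_value(value, visible=4):
    """Replace all but the last `visible` characters with 'x'."""
    if len(value) <= visible:
        return value
    return "x" * (len(value) - visible) + value[-visible:]


def apply_policies(rows, user_groups, row_filter, masked_columns, unmasked_groups):
    """Return only the rows the user may see, masking sensitive columns."""
    visible_rows = [row for row in rows if row_filter(row, user_groups)]
    if user_groups & unmasked_groups:
        # Members of a privileged group see the original values.
        return [dict(row) for row in visible_rows]
    return [
        {col: mask_value(str(val)) if col in masked_columns else val
         for col, val in row.items()}
        for row in visible_rows
    ]


def eu_only_unless_admin(row, user_groups):
    """Hypothetical row filter: non-admins only see rows for the EU region."""
    return "admins" in user_groups or row["region"] == "EU"


if __name__ == "__main__":
    rows = [
        {"region": "EU", "card": "1234567812345678"},
        {"region": "US", "card": "8765432187654321"},
    ]
    print(apply_policies(rows, {"analysts"}, eu_only_unless_admin, {"card"}, {"admins"}))
    print(apply_policies(rows, {"admins"}, eu_only_unless_admin, {"card"}, {"admins"}))
```

The same query therefore returns different results depending on who runs it, without any change to the stored data.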

Summary

Apache Hadoop is a stable, well-known platform with a large ecosystem of related products and tools. An addition to the released tools is the Apache Ranger framework, which can help you manage data security across the Hadoop platform more effectively. Apache is also asking for feedback on this framework, so if you are a regular Hadoop user or do freelance work in this field, now is a good time to evaluate it and provide feedback to improve the platform.